Improving Safety and Velocity at Robinhood with our Deployment Platform

Ann Rajan and Kumail Naqvi are Software Platform engineers.

Over the past year, we rolled out a deployment platform that deploys builds generated by our engineers and acts as the management console on top of our Archetype Framework. This platform makes code deployment safer and faster, and it has been critical to supporting the exponential growth of our business. For some historical context, we introduced the Archetype Framework in a 2019 blog post, where we outlined how we embraced Kubernetes to tackle deployment challenges and described our experience onboarding applications onto the Kubernetes platform.

In this post, we’d like to bring you up to speed on what we’ve done since 2019, and how we’ve continued to leverage the Archetype Framework to deploy applications more efficiently. We’ll walk you through our deployment architecture, its components and capabilities, and what we’re looking to do next to increase developer velocity.

Deployment Platform Architecture

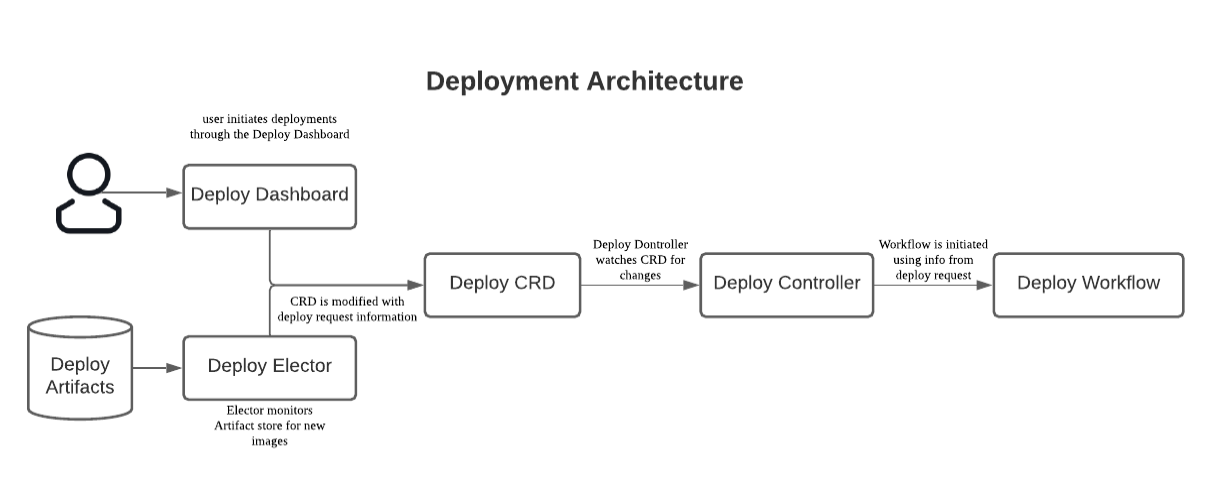

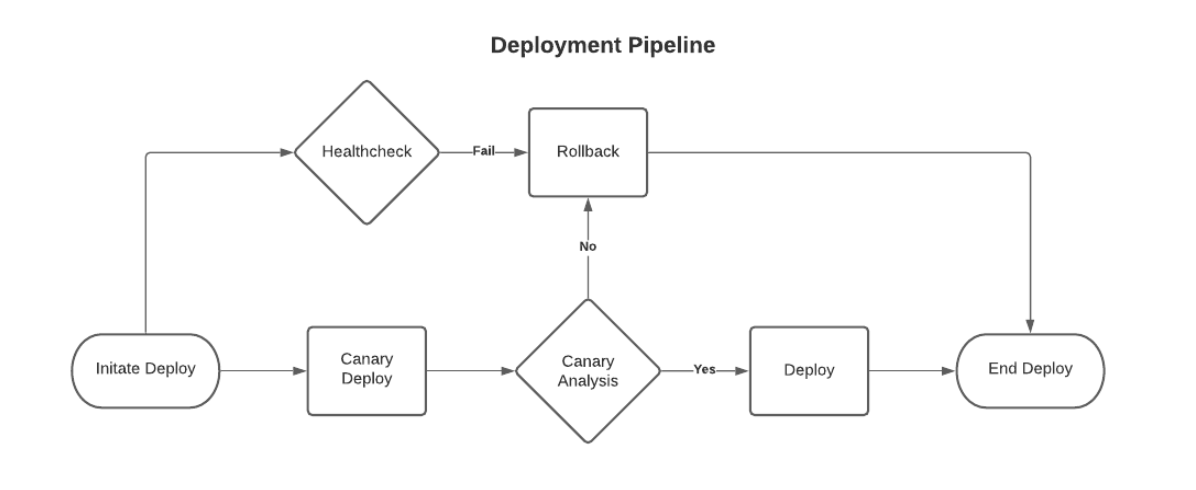

Our deployment platform detects new builds and allows engineers to deploy those builds safely. The process features a canary deployment, a canary analysis that compares the performance of the new code with data from a stable version, and a health check that monitors the condition of the application. If any step fails, the deployment automatically rolls back to the last healthy version of the application.

Four main components define the application deployment process at Robinhood:

- Deploy Dashboard

The dashboard is the centerpiece of the system: it gives us a bird’s-eye view of all the projects currently in production. We custom-built an internal web application that provides a much-needed web interface for Kubernetes and for the abstractions we built on top of it with the Archetype Framework. The Deploy Dashboard facilitates interactions with our Archetype Custom Resource Definitions and allows engineers to view and manage their applications running on Kubernetes. It also gives application owners the ability to deploy applications and monitor those deployments. This interface removes the need for most application owners to interact with Kubernetes from the command line, which in turn removes the risks associated with running kubectl commands directly.

The dashboard shows which components are part of a given application across different Kubernetes clusters (each component maps to multiple Kubernetes-native resources). From the dashboard, engineers can run common kubectl commands, scale the number of pods in an application, and view logs from the containers in each pod. Engineers can also deploy a commit from the dashboard, which captures the deployment information in the Deploy Custom Resource Definition (CRD).

- Deploy Elector

The Deploy Elector is a standalone application that continuously monitors our build artifact repository for new application artifacts. Once it identifies a candidate, it will update the Deploy CRD with the relevant information. The actual deployment is then scheduled through the Deploy Controller by an engineer.
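To make the Elector's job concrete, here is a minimal sketch of the "find a candidate, record it on the Deploy resource" step. It is not our production code: the `Artifact` shape, the `elect_candidate` and `record_candidate` names, and the "newest build wins" rule are all assumptions made for illustration, and the Deploy resource is modeled as a plain dictionary rather than a real Kubernetes object.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Artifact:
    """A build artifact as a repository might describe it (hypothetical shape)."""
    app: str
    commit: str
    built_at: int  # Unix timestamp of the build


def elect_candidate(artifacts: List[Artifact], app: str,
                    deployed_commit: Optional[str]) -> Optional[Artifact]:
    """Return the newest artifact for `app`, or None if there is nothing new."""
    builds = [a for a in artifacts if a.app == app]
    if not builds:
        return None
    newest = max(builds, key=lambda a: a.built_at)
    # Nothing to elect when the newest build is already the deployed one.
    return None if newest.commit == deployed_commit else newest


def record_candidate(deploy_resource: dict, candidate: Artifact) -> dict:
    """Return a copy of the Deploy resource with the candidate recorded on it."""
    updated = dict(deploy_resource)
    updated["candidate"] = {"commit": candidate.commit, "builtAt": candidate.built_at}
    return updated
```

In this sketch the Elector only records a candidate; it never starts a rollout itself, which mirrors the split described above between detecting a build and scheduling its deployment.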

- Deploy Operator

The Deploy Operator consists of two parts: a Custom Resource Definition and a corresponding Controller. The Deploy Controller monitors the Deploy CRD, which holds information about an application’s deployment state. Either the Deploy Elector or the Deploy Dashboard updates this CRD object with a Deploy Request containing the deployment’s metadata, such as the user who initiated the deployment, the version to deploy, and the current time, among other items. When the Controller sees that a new Deploy Request has been created, it creates a new deployment workflow to initiate the deployment.
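The controller behavior described above can be sketched as a small reconcile loop. This is an illustration under stated assumptions, not our actual operator: `DeployRequest`, `DeployResource`, `DeployController`, and the `request_id` field are hypothetical names, the resource is an in-memory object rather than something watched through the Kubernetes API, and `start_workflow` stands in for whatever submits the real workflow.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass(frozen=True)
class DeployRequest:
    """Metadata for one requested deployment (hypothetical fields)."""
    request_id: str
    user: str
    version: str
    requested_at: float


@dataclass
class DeployResource:
    """In-memory stand-in for an application's Deploy custom resource."""
    app: str
    requests: List[DeployRequest] = field(default_factory=list)


class DeployController:
    """Starts one workflow for every Deploy Request it has not seen before."""

    def __init__(self, start_workflow: Callable[[str, DeployRequest], None]) -> None:
        self._start_workflow = start_workflow
        self._seen: Set[str] = set()

    def reconcile(self, resource: DeployResource) -> int:
        """Process new requests on `resource`; return how many workflows started."""
        started = 0
        for request in resource.requests:
            if request.request_id in self._seen:
                continue  # already handled, so reconciling again is a no-op
            self._seen.add(request.request_id)
            self._start_workflow(resource.app, request)
            started += 1
        return started
```

Tracking which requests have already been handled keeps `reconcile` idempotent, so calling it repeatedly on an unchanged resource never launches a duplicate workflow.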

- Deploy Workflow

A diagram of the deploy workflow is shown below. The pipeline is codified as a directed acyclic graph (DAG). We use Argo, an open-source workflow engine, to execute the deployment steps in the workflow, with conditionals determining which step the workflow runs next.

After a deployment has been initiated by the engineer, the workflow immediately deploys to canary, which directs a small portion of user traffic to the new version. Next, it starts canary analysis, where the current production metrics are compared to the new canary metrics. If canary analysis returns a positive signal, the workflow promotes the rest of the application to the newest version. During all of this, the health check monitors the overall condition of the application. If any of these steps fails at any point, the deployment automatically rolls back to the last healthy version.
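The control flow of the workflow can be sketched in a few lines of plain Python. This is a simplified stand-in, not an Argo definition and not our real canary analysis: the step names, `run_deploy_workflow`, `canary_passes`, and the 10% tolerance are assumptions, the metric comparison assumes "lower is better" metrics such as error rate or latency, and the health check is polled after each step rather than running continuously alongside the workflow.

```python
from typing import Callable, Dict, List, Tuple

Step = Callable[[], bool]  # a step returns True on success


def canary_passes(baseline: Dict[str, float], canary: Dict[str, float],
                  tolerance: float = 0.1) -> bool:
    """True if no canary metric exceeds its baseline by more than `tolerance`."""
    return all(canary[name] <= baseline[name] * (1 + tolerance) for name in baseline)


def run_deploy_workflow(steps: Dict[str, Step],
                        health_check: Step) -> Tuple[str, List[str]]:
    """Run canary, analysis, and promote in order; roll back on any failure."""
    executed: List[str] = []
    for name in ("deploy_canary", "canary_analysis", "promote"):
        executed.append(name)
        # The conditional: a failed step or a failed health check diverts to rollback.
        if not steps[name]() or not health_check():
            executed.append("rollback")
            steps["rollback"]()
            return "rolled_back", executed
    return "succeeded", executed
```

For example, if the `canary_analysis` step wraps `canary_passes({"error_rate": 0.01}, {"error_rate": 0.05})`, the comparison fails, `promote` never runs, and the workflow ends in `rolled_back`.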

Advanced Features

- Event logging: We wanted a robust way to preserve deploy history and monitor manual user actions as the number of deployments grew. For this, we leverage events already captured by the Kubernetes API server and store them in a separate database. This makes every action and log related to a deployment easily accessible through the dashboard.

- Multi-cluster support: Each of our applications lives on multiple Kubernetes clusters to ensure redundancy and safety if one of our clusters is affected. We’ve implemented a feature that allows our engineers to deploy the same code to multiple clusters at once, greatly reducing the possibility of inconsistent versions of an application running across different clusters.

- Deploy locking: We built a feature that allows Kubernetes cluster admins to lock deployments for all applications. This is needed when maintenance work is under way in the cluster, or when the cluster is overloaded and going through with a deployment may be unsafe.
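Multi-cluster deploys and deploy locking interact, and a short sketch shows one way they could fit together. Everything here is hypothetical: `deploy_to_clusters`, `DeploysLocked`, and the cluster names are illustrative, and the choice to refuse the whole rollout when any target cluster is locked is an assumption of this sketch rather than a description of how our platform behaves.

```python
from typing import Callable, Dict, Iterable, Set


class DeploysLocked(Exception):
    """Raised when an admin lock blocks a deployment."""


def deploy_to_clusters(version: str, clusters: Iterable[str], locked: Set[str],
                       apply: Callable[[str, str], None]) -> Dict[str, str]:
    """Apply `version` to every cluster, or to none if any target is locked."""
    targets = list(clusters)
    blocked = sorted(c for c in targets if c in locked)
    if blocked:
        # Check every lock before touching any cluster, so a locked cluster
        # cannot leave the others running a different version.
        raise DeploysLocked("deploys are locked on: " + ", ".join(blocked))
    for cluster in targets:
        apply(cluster, version)
    return {cluster: version for cluster in targets}
```

Checking locks up front, before any cluster is changed, is what keeps this sketch from producing the inconsistent versions that multi-cluster support is meant to prevent.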

Key Takeaways

Since we introduced our new deployment platform, code deployments are faster and more stable. Instead of one bundled omnibus deploy per day, we’re now rolling out numerous deployments each day across our ~150 applications. For context, we only had 10 applications on the Archetype Framework when we published the first iteration of this blog back in 2019. Since then, we’ve experienced a substantial shift in how we work in three key ways:

- Increased Developer Velocity and Production Safety

- Sophisticated Analysis System Integrated with ML

- Improved Visibility Into Our Deployment Performance

Our Code Deployment Wishlist

Our work is still not done. In the future, we’re focused on enhancing our existing deployment capacity across several areas:

More advanced machine learning for canary analysis

We also want to build more intricate and advanced machine learning models for our canary analysis service. Ideally, the burden of making sure a new application version is behaving as expected would shift from the application developers over to our machine learning models.

Expand the scope of the deploy platform

The current iteration of our platform is designed to help engineers manage their deployments and get a general overview of their application. In the future, beyond adding more operational features, we want to expand this to become a hub for Robinhood engineers to monitor their application health, easily make configuration changes, and use it as a unified management platform for their applications.

Visit our careers page to learn more about working with them at Robinhood.

© 2021 Robinhood Markets, Inc.