Scaling Robinhood Crypto Systems

Authors Chirantan Mahipal, Hefu Chai, and Xuan Zhang work on Crypto Engineering at Robinhood.

In 2021, we witnessed a significant increase in crypto trading volume relative to the earlier years of the Robinhood Crypto product, which debuted in 2018. This exciting growth challenged us to scale our systems to better support our customers.

In this blog post, we dive into how we scaled our crypto systems, starting from the need to support hypergrowth in traffic, followed by an extended effort to align on our long-term crypto strategy.

Initial efforts

Our crypto backend is built on the Django framework with PostgreSQL databases. As traffic increased, we noticed that the primary database and its read replica had become the performance bottlenecks. Surges in database CPU usage and replication lag at times posed a high risk of degrading the product experience.

To better scale our systems, our infrastructure and product teams got together and decided to make these optimizations: reduce database loads, improve database schemas, conduct load tests and size the demand, and prioritize critical flows.

Reduce database loads

After we audited each query made on every request, we identified that we needed to:

- Eliminate redundant queries.

- Redirect certain queries to read replicas, to offload the primary database.

- Avoid cases where multiple expensive queries were made to calculate certain values in hot read paths. Instead, we started storing these pre-calculated values at write time.
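The last item in the list above, storing pre-calculated values at write time, can be illustrated with a minimal sketch. It uses an in-memory SQLite database as a stand-in for PostgreSQL, and the `orders` and `positions` tables, their columns, and the function names are all hypothetical, chosen only for illustration.

```python
import sqlite3

# Assumption: SQLite stands in for PostgreSQL; table and column names are made up.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, account_id INTEGER, quantity REAL);
    CREATE TABLE positions (account_id INTEGER PRIMARY KEY, total_quantity REAL NOT NULL);
""")


def record_order(account_id, quantity):
    """Insert an order and update the stored total in the same transaction."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO orders (account_id, quantity) VALUES (?, ?)",
            (account_id, quantity),
        )
        updated = conn.execute(
            "UPDATE positions SET total_quantity = total_quantity + ? WHERE account_id = ?",
            (quantity, account_id),
        )
        if updated.rowcount == 0:  # first order for this account
            conn.execute(
                "INSERT INTO positions (account_id, total_quantity) VALUES (?, ?)",
                (account_id, quantity),
            )


def total_by_aggregation(account_id):
    """Read path before the change: aggregate over every order on each request."""
    row = conn.execute(
        "SELECT COALESCE(SUM(quantity), 0) FROM orders WHERE account_id = ?",
        (account_id,),
    ).fetchone()
    return row[0]


def total_precomputed(account_id):
    """Read path after the change: a single primary-key lookup."""
    row = conn.execute(
        "SELECT total_quantity FROM positions WHERE account_id = ?",
        (account_id,),
    ).fetchone()
    return row[0] if row else 0.0


record_order(1, 0.5)
record_order(1, 0.25)
record_order(2, 1.0)
```

The trade-off in a design like this is a slightly more expensive write in exchange for a much cheaper hot read path; updating both tables in one transaction keeps the stored value consistent with the underlying rows.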

This audit also helped us improve the write throughput for certain critical flows by up to 50% and the read throughput by about 4x.

Various app flows are designed with a costly polling mechanism to always fetch the latest data. After identifying certain expensive read requests whose responses don’t change often and can tolerate some staleness, we used a cluster of memcache nodes to cache them. To ensure correctness, we never used caching on the write paths. Even a short caching duration drastically reduced the number of queries that the database needed to serve.
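To see why even a short caching duration helps under polling, here is a minimal sketch. The `TTLCache` class is a hypothetical in-process stand-in for a memcache cluster, and the cache key, the five-second duration, and the `expensive_read` function are assumptions made for the example.

```python
import time


class TTLCache:
    """Tiny in-process stand-in for a memcache cluster (hypothetical helper)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_compute(self, key, ttl_seconds, compute):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: no database query
        value = compute()  # expired or missing: hit the database once
        self._store[key] = (now + ttl_seconds, value)
        return value


class FakeClock:
    """Manually advanced clock so the demo does not need to sleep."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now


db_calls = []  # one entry per simulated database query


def expensive_read():
    db_calls.append(1)
    return {"symbol": "BTC", "points": [1, 2, 3]}


clock = FakeClock()
cache = TTLCache(clock=clock)

# A client polls once per second for ten seconds; responses are cached for five.
for second in range(10):
    clock.now = float(second)
    cache.get_or_compute("history:BTC", ttl_seconds=5, compute=expensive_read)

print(len(db_calls))  # 2 database queries instead of 10
```

Only read paths go through the cache here, which mirrors the constraint above that write paths are never cached.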

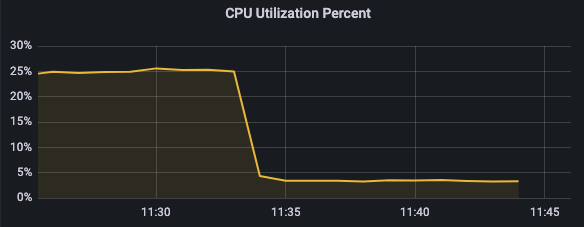

These improvements led to a 7x reduction in CPU usage and, for some endpoints, cut the number of queries made per request from more than 30 down to 1.

So far so good.

Improve database schemas

To measure the cost of every query made to the database, we used tools like pganalyze and Jaeger. For finer details of the query plans for expensive queries, we used EXPLAIN ANALYZE. Understanding query costs and plans helped us identify the right columns to index for the biggest performance gains. Adding indexes sometimes reduced the query time by 1000x on certain common queries. Seeing what those queries cost also led us to eliminate expensive JOIN operations where possible. Lastly, we unified the Android, iOS, and Web front-end implementations to take advantage of the database optimizations.
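The workflow of inspecting a query plan, adding an index, and checking the plan again can be sketched as follows. This is an illustration only: it uses SQLite and its `EXPLAIN QUERY PLAN` statement as a stand-in for PostgreSQL's `EXPLAIN ANALYZE`, and the `orders` table, its columns, and the index name are hypothetical.

```python
import sqlite3

# Assumption: SQLite stands in for PostgreSQL; the schema is invented for the example.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, account_id INTEGER, state TEXT, quantity REAL)"
)
conn.executemany(
    "INSERT INTO orders (account_id, state, quantity) VALUES (?, ?, ?)",
    [(i % 100, "filled", 1.0) for i in range(1000)],
)

QUERY = "SELECT id, state, quantity FROM orders WHERE account_id = ?"


def query_plan(sql, params):
    """Return the planner's description of how it would run the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the readable detail


plan_before = query_plan(QUERY, (42,))  # full table scan
conn.execute("CREATE INDEX idx_orders_account ON orders (account_id)")
plan_after = query_plan(QUERY, (42,))  # index lookup on account_id

print(plan_before)
print(plan_after)
```

The exact plan text differs between database engines and versions, so the useful signal is the change from a scan of the whole table to a search that names the new index.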

Conduct load tests and size the demand

We conducted load testing with our in-house framework to measure the load our system could handle. The framework allows us to replay recorded production read traffic with a scale factor. To understand the breaking points of our primary database under write traffic, we put together a system that synthesized artificial user orders in a staging environment to mimic production. Through these tests, we built insights into the scalability of our systems, and then scaled the infrastructure accordingly.
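The in-house framework is not described in detail here, so the following is only a rough sketch of what replaying recorded traffic with a scale factor could look like. The `RecordedRequest` type and the `scale_replay` function are hypothetical, and the sketch only builds the replay schedule; actually sending the requests is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecordedRequest:
    offset_s: float  # seconds since the start of the recording
    path: str        # hypothetical read endpoint


def scale_replay(recorded, scale_factor):
    """Return a schedule with roughly scale_factor times the recorded traffic.

    Fractional factors are handled with a running remainder, so a factor of
    2.5 alternates between two and three copies of each request.
    """
    if scale_factor <= 0:
        raise ValueError("scale_factor must be positive")
    schedule = []
    carry = 0.0
    for request in sorted(recorded, key=lambda r: r.offset_s):
        carry += scale_factor
        copies = int(carry)
        carry -= copies
        schedule.extend([request] * copies)
    return schedule


recorded = [
    RecordedRequest(0.0, "/holdings"),
    RecordedRequest(0.4, "/quotes"),
    RecordedRequest(0.9, "/holdings"),
    RecordedRequest(1.3, "/orders"),
]
schedule = scale_replay(recorded, 2.5)
```

Keeping the original offsets on each copy preserves the shape of the recorded traffic while multiplying its volume.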

Prioritize critical flows

To prepare for overload, we built a load shedding and throttling mechanism. It allowed us to keep critical flows functioning by temporarily turning off certain less business-critical features and using the capacity saved. To reduce the blast radius, we separated order flows into standalone servers and PgBouncer connection pools. Fortunately, our substantial improvements on the scaling front meant that we never had to use these mechanisms.
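As an illustration of the two ideas in this section, here is a minimal sketch of priority-based load shedding and token-bucket throttling. It is not the mechanism described above: the priority levels, the class names, and the token bucket algorithm are all assumptions chosen for the example.

```python
import time
from enum import IntEnum


class Priority(IntEnum):
    CRITICAL = 0  # assumption: e.g. order flows
    NORMAL = 1
    LOW = 2       # assumption: less business-critical features


class LoadShedder:
    """Operator-controlled switch that turns off lower-priority features."""

    def __init__(self):
        self.least_critical_served = Priority.LOW  # serve everything by default

    def shed_below(self, priority):
        """Serve only requests at this priority or more critical."""
        self.least_critical_served = priority

    def admit(self, priority):
        return priority <= self.least_critical_served


class TokenBucket:
    """Throttle that allows `rate_per_s` requests per second with a small burst."""

    def __init__(self, rate_per_s, burst, clock=time.monotonic):
        self._rate = rate_per_s
        self._burst = burst
        self._clock = clock
        self._tokens = float(burst)
        self._last = clock()

    def allow(self):
        now = self._clock()
        # Refill in proportion to elapsed time, capped at the burst size.
        self._tokens = min(self._burst, self._tokens + (now - self._last) * self._rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


shedder = LoadShedder()
shedder.shed_below(Priority.CRITICAL)  # overload: keep only critical flows on
```

With the switch set as in the last line, `shedder.admit(Priority.CRITICAL)` still returns `True` while normal and low-priority requests are rejected, which frees capacity for the critical flows.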

Longer-term solution

After working through these traffic peaks with multiple rounds of optimizations, our Crypto team embarked on a journey to enable the crypto backend to scale horizontally, with the goal of preparing it for greater scalability demands.

Given that the single database server had become the bottleneck, we evaluated whether to directly shard the database or to shard the whole stack from application layer to data layer.

Deciding on how to shard

One thing we considered was a previously successful sharding effort in our brokerage system, which had a similar server architecture and similar performance bottlenecks. Because sharding the whole stack aligned with our priority for reliability and provided more service isolation, reducing the blast radius of potential failures in subcomponents, we determined that it was the way to go.

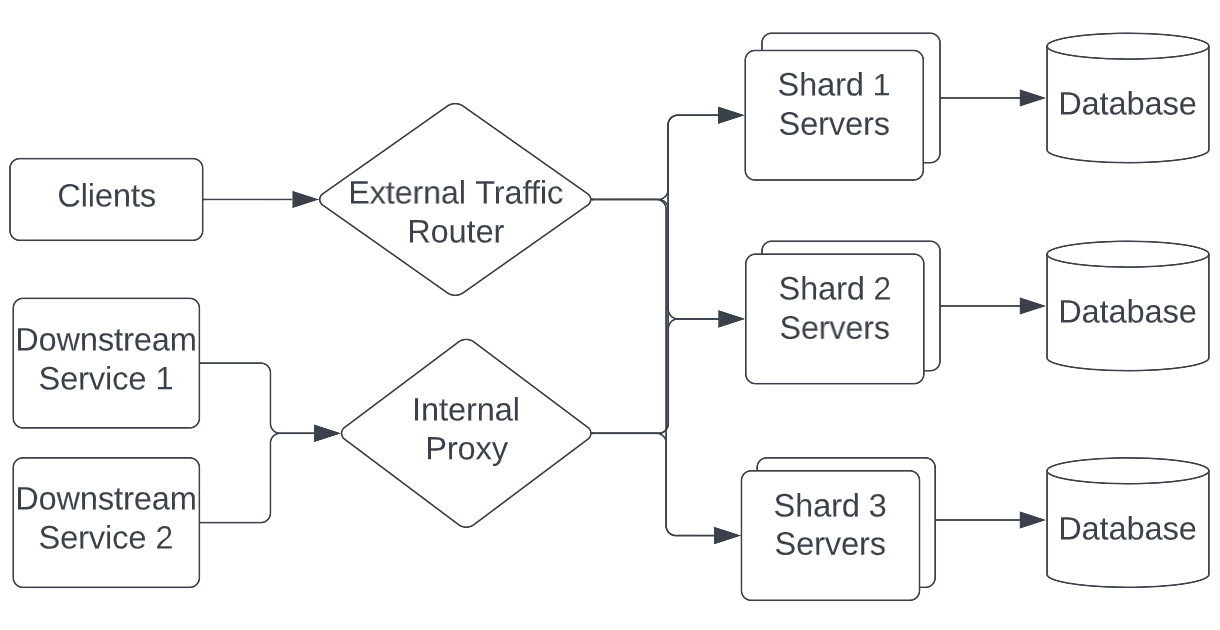

A new physical shard would consist of a suite of standalone servers, daemons, cache and database. The architecture would involve three major changes:

- Build the shard management components.

- Change the backend and downstreams to be multi-shard compatible.

- Split the monolith.

Building shard management components

To properly route the external traffic, we added a public load balancer routing layer to determine the shard that the customer resides on, based on the record within a request, and route the request accordingly.

For internal traffic, to hide the underlying shard details from downstream services, we set up an internal API proxy that sat in front of the shards. This stateless server routed requests to the appropriate shards and aggregated their responses without exposing the infrastructure setup.
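The routing and aggregation described above can be sketched in a few lines. Everything named here is hypothetical: the `ShardDirectory` lookup table, the `InternalProxy` class, the shard names, and the `get_balances` call are assumptions made for illustration, and real shards would be reached over the network rather than through in-process functions.

```python
class ShardDirectory:
    """Maps each customer to the shard that hosts their data (hypothetical)."""

    def __init__(self, default_shard):
        self._default = default_shard
        self._assignments = {}

    def assign(self, customer_id, shard):
        self._assignments[customer_id] = shard

    def shard_for(self, customer_id):
        # Assumption: customers without an explicit record live on the original shard.
        return self._assignments.get(customer_id, self._default)


class InternalProxy:
    """Fans a request out to the right shards and merges the responses."""

    def __init__(self, directory, shard_backends):
        self._directory = directory
        self._backends = shard_backends  # shard name -> callable(customer_ids) -> dict

    def get_balances(self, customer_ids):
        ids_by_shard = {}
        for customer_id in customer_ids:
            shard = self._directory.shard_for(customer_id)
            ids_by_shard.setdefault(shard, []).append(customer_id)
        merged = {}
        for shard, ids in ids_by_shard.items():
            merged.update(self._backends[shard](ids))  # caller never sees shard names
        return merged


def make_backend(balances):
    """Build a fake shard backend from a dict of customer balances."""
    return lambda ids: {cid: balances[cid] for cid in ids}


directory = ShardDirectory(default_shard="shard-1")
directory.assign("c3", "shard-2")
proxy = InternalProxy(
    directory,
    {
        "shard-1": make_backend({"c1": 10, "c2": 20}),
        "shard-2": make_backend({"c3": 30}),
    },
)
result = proxy.get_balances(["c1", "c3", "c2"])
```

Downstream callers pass customer identifiers and get back one merged response, so adding a shard changes the directory and the backend map but not the callers.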

Changing backend and downstreams to be multi-shard compatible

To ensure a smooth transition into a multi-shard system, we made a series of refactorings across the system, including making identifiers globally unique, limiting Kafka consumers to processing only the messages that belong to their shard, and properly handling data across shards in the data lake.
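One way to picture the first two refactorings is the sketch below. It is an assumption, not the scheme used in the system described here: it makes identifiers unique across shards by embedding a shard number in the low bits, then uses that number to filter messages. The bit width, the names, and the message shape are hypothetical, and other approaches such as UUIDs would also give global uniqueness.

```python
import itertools

SHARD_BITS = 10  # assumption: leaves room for 1024 shards
SHARD_MASK = (1 << SHARD_BITS) - 1


class ShardIdGenerator:
    """Generates identifiers that cannot collide with those of another shard."""

    def __init__(self, shard_id):
        if not 0 <= shard_id <= SHARD_MASK:
            raise ValueError("shard_id out of range")
        self._shard_id = shard_id
        self._counter = itertools.count(1)  # stand-in for a per-shard sequence

    def next_id(self):
        # High bits: per-shard sequence number. Low bits: the shard itself.
        return (next(self._counter) << SHARD_BITS) | self._shard_id


def shard_of(identifier):
    """Recover the owning shard from an identifier."""
    return identifier & SHARD_MASK


def messages_for_shard(messages, shard_id):
    """Yield only the messages whose account lives on this shard."""
    for message in messages:
        if shard_of(message["account_id"]) == shard_id:
            yield message


shard_0_ids = ShardIdGenerator(0)
shard_1_ids = ShardIdGenerator(1)
account_a = shard_0_ids.next_id()
account_b = shard_1_ids.next_id()

stream = [
    {"account_id": account_a, "event": "order_filled"},
    {"account_id": account_b, "event": "order_filled"},
]
mine = list(messages_for_shard(stream, shard_id=1))
```

In this sketch a consumer running on shard 1 skips the message for `account_a` and processes only the one for `account_b`, even though both shards read the same stream.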

Splitting the monolith

Besides serving as the front-office authorization system for trading crypto assets, the crypto backend system does a series of jobs, including performing the settlements against our execution counterparts.

We extracted settlements into a standalone component and database, which allowed us to disentangle this less latency-sensitive component from those with stricter latency requirements. By sharing the settlement component across shards, we saved on the operational overhead of duplicating the setup when adding a new shard.

With the sharding architecture rolled out, the team spun up a new shard that was completely isolated from the old one. The new shard started to take on its share of load by hosting the majority of newly registered customers.

What’s next

The Robinhood Crypto team is committed to continuously scaling our systems to provide the best user experience and to improving engineering efficiency. These are our immediate priorities:

- Balance loads among more shards: To maintain a healthy customer-per-shard ratio, we’re now bringing up more shards and balancing the number of users among shards. The objective is to reduce the scaling stress while limiting the blast radius of potential system failures.

- Benchmark our system: While our backend system already undergoes recurring read load testing, we’re setting up write load tests in an isolated environment. Together, these will let us consistently benchmark the system, detect regressions in a timely manner, and identify bottlenecks when the system is under high load.

- Reduce operational cost: To address operational overhead, we’re investing in tools and automation, such as streamlining the process of spinning up a new shard and making cross-shard management efficient and safe. Together with the tooling provided by the Load & Fault team, this makes it easier to replay test suites, compare benchmarks, and identify system bottlenecks.

- Manage infrastructure cost: In addition to allocating more infrastructure resources to help with scaling, we need our systems to be cost-effective, so we’re now partnering with the Robinhood Capacity Planning team to find that balance.

When hypergrowth in trading volume occurred, we successfully scaled the Robinhood Crypto system to provide our valued customers with what they needed when they needed it.

This supported our vision to make Robinhood the easiest and most approachable place to invest in and use cryptocurrencies.

All trademarks, logos and brands are the property of their respective owners.

Robinhood Markets Inc. and Medium are separate and unique companies and are not responsible for one another’s views or services.

© 2022 Robinhood Markets, Inc.